Modern technology allows us to create an orchestra without humans [Kurzweil]. The synergy of automated music generation and computer animation is possible, but to my knowledge it has not been investigated in a theater. We will be able to create realistic agents such as giants, angels, dwarfs and sirens. Computer robot animation will soon allow us to create effects that will far exceed what is now understood by realism. The surrealistic and superrealistic worlds of future robot theaters will fascinate humans. Unimaginable realities will happen and will be understood. We will be able to create figures from smoke and fire, and to project moving light images on mist.

Intelligent robots and automated theatres will have unlimited potential to tell even the most unbelievable stories with total freedom of artistic expression. The public will freely interact with robots in unpredictable scenarios. New forms of art will emerge that will be far more engaging than theatre or cinema. Some artists speculate that robotization will bring a new kind of mystery, so characteristic of, for instance, mystery plays or puppet theatre. In contrast to film animations, where the animation effects cannot be observed in real matter and real time, or Disney-like theme parks, where the animation is totally programmed and separated from the audience, new robotic theaters will allow total interaction and communication with the public. Thus the barrier between humans and robot-actors will become blurred and will finally disappear (in the theater). The influence of this new art form on children is hard to predict now, but so far early experience shows that ``everybody loves robots'', and especially ``children love robots''. Creators of this new art form must thus act very responsibly.

But this is a long-term perspective. Let us concentrate on the next few years.

In our theatre, robots will be taught movements and behaviors by humans who remotely operate them while playing the roles of humans, animals, angels, and devils. The operators will teach the robots to speak, pronounce, move, perform, act, behave and learn. Machine learning and evolutionary techniques, both supervised and unsupervised, will be used. They will be partially realized in hardware (FPGA/microcontroller parallel systems) to obtain speeds impossible in software. These human operators will be researchers like me and my graduate students, undergraduate and high-school students, and also tele-visitors from all over the world, who will play roles in plays performed in the robot theater. It still remains to be decided what plays will be performed, but perhaps classical tragedies and comedies will be more influential than cabaret. For instance, we will try to adapt the myth of Prometheus to our environment.

The goal will be to involve people around the world in thinking about the fundamentals of collaboration, conflict, cooperation, egoism, altruism, movement, dance, speech, recognition, interaction, imitation, group behavior, myth, theatre, art, and creativity.

Nobody has yet proposed to create a ROBOTIC THEATER on the WWW. There exist a few puppet theaters with robots as puppets [Ullanta00, MUSEUM]. There are single robots connected to the WWW [USC], but there is no robot theatre on the WWW. We will call ours the OREGON CYBER THEATRE. Let us be brave enough to try this new idea and observe what emerges.

Oregon Cyber Theatre will be composed of:

- A. Robots-puppets located in interdisciplinary Intelligent Robotics Laboratory at Portland State University (Suite FAB 70).

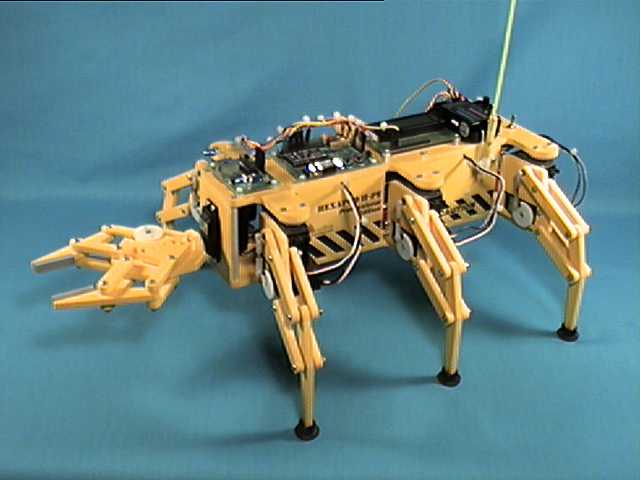

- B. Cameras and sensors located on the puppets (for instance in their eyes, see Figure 1.)

- Computer-controlled cameras for passive observers (Figure 2) will be placed at various locations in the room.

Figure 1. Walking Hexapod Spider with a camera.

Figure 2. Computer-controlled camera using an OWI arm built from a kit. Such a camera could be built for less than $100 in 2000.

- C. Microphones and other sensors in the physical theatre.

- D. Computers in the lab controlling the robots by radio, by tether, or directly. They will range from laptops to special-purpose FPGA-based supercomputers. Movement control, learning, image processing, natural language/speech software, and AI software will be installed on these computers. This software will be developed at Portland State University (PSU), the Oregon Graduate Institute (OGI) and by our external collaborators. All computers will be linked to the WWW.

- E. Global recording mechanisms of what happens on the scene. All control decisions, events, images, sounds, sensor readings, etc. will be recorded as a base for further protocol analysis and learning processes.

- F. Computers at Internet tele-sites, linked to the WWW.

- G. Role-playing software at tele-sites, WWW-linked to our software controlling the puppets and the scene (lights, scene rotations, etc).

- H. In the next phase, cameras located at tele-agent sites. Such a camera could, for instance, watch a person in Honolulu and replicate her movements on our spider or dog puppet (the "avatar concept" well known from multimedia and video-animation systems).

- I. In the next phase, microphones and sensors located at tele-sites. People will act with their own body movements and voices, which will be transformed into the movements and voices of the robotic puppets.
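
The global recording of item E could be as simple as an append-only log of timestamped events per channel, saved as a "play" and later replayed against the robots. The sketch below assumes this design; the `Event` fields, the channel and source labels, and the JSON-lines format are hypothetical choices, since the text does not specify a recording format.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class Event:
    t: float      # seconds since the start of the performance
    source: str   # who produced it, e.g. "joystick@Honolulu" (hypothetical label)
    channel: str  # which robot part it drives, e.g. "spider1.legs" (hypothetical)
    value: str    # the command or sensor reading, as text


class Recorder:
    """Append-only log of control decisions and sensor readings."""

    def __init__(self) -> None:
        self.events: List[Event] = []

    def record(self, event: Event) -> None:
        self.events.append(event)

    def dumps(self) -> str:
        """Serialize the recorded play as JSON lines, one event per line."""
        return "\n".join(json.dumps(asdict(e)) for e in self.events)

    @staticmethod
    def loads(text: str) -> List[Event]:
        """Rebuild the event list from JSON lines, skipping blank lines."""
        return [Event(**json.loads(line)) for line in text.splitlines() if line.strip()]


def replay(events: List[Event], channel: str) -> List[Event]:
    """One channel's events in time order, ready to be re-sent to a robot."""
    return sorted((e for e in events if e.channel == channel), key=lambda e: e.t)
```

Because every event carries its source, the same log can later be filtered by tele-site or by channel, which is the kind of material that protocol analysis and learning would consume.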

Our plan is first to build 8 radio-controlled spiders with grippers and cameras. We will thus be able to observe and demonstrate some simple emergent social phenomena. Having eight spiders will allow us to designate four of them as males and four as females, with two couples in each "country". This will allow us to perform plays and observe emergent phenomena such as duels, war, love, sexual reproduction, creation of families (polygamous and monogamous), collaboration, competition, emergence of hierarchy, belief and morality: truth-telling and lying robots, cheating and honest workers, the Ten Commandments adapted to the robot-spider miniworld versus Asimov's Three Laws of Robotics.

The next generation of robots will be "Hexapod Centaurs", built at 4:1 scale, with six legs for better stability and strength but with a "human-like" upper body (head and hands). This will allow us to extend the repertoire of plays and games.

Figure 3. A radio-controlled Basic Spider with a gripper.

Currently we have only one fully operational walking robot, a Spider (Figure 1). The next one will walk in a few days, and two more spiders will be ready in the summer of 2000. Next we will add more dogs, cats, horses, spiders, turtles and other animals. We will build animated humans, but they will not walk; these robots will be stationary or wheeled (perhaps some in wheelchairs).

Many robots will be built by converting Halloween items, toys, mannequins and other existing items (Figures 4, 5 and 6). After-holiday sales provide an opportunity to purchase such items at a fraction of their original price, a real bargain for robot enthusiasts. Others are built from commercially available kits and upgraded (Figure 7). Many excellent robotic toys are made in China and Japan; they will also be used after computer interfacing and mechanical modifications (Figure 8).

Figure 4. Talking and dancing bears.

Figure 5. Halloween skeletons. This $10 (on sale) toy can be converted to a talking and moving robot.

Figure 6. A variety of heads that can be converted to speak arbitrary text by replacing their EPROMs with a parallel-port interface to a PC.

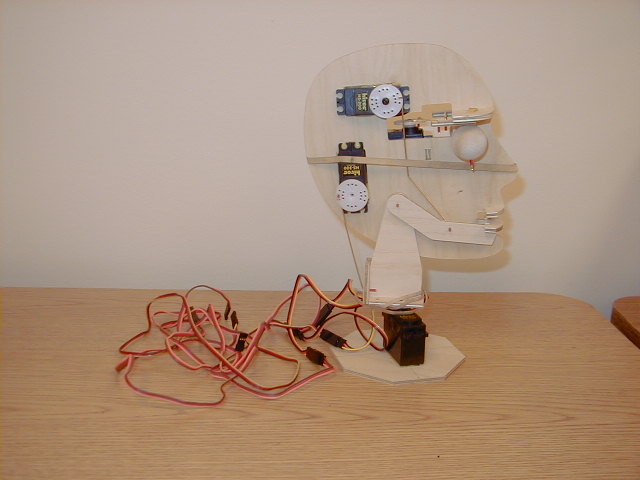

Figure 7. A talking head with Servo motors.

Figure 8. A Furby toy without her fur. Interface added.

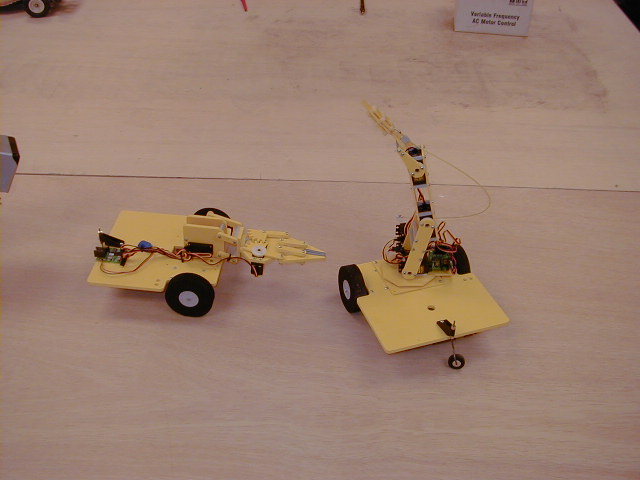

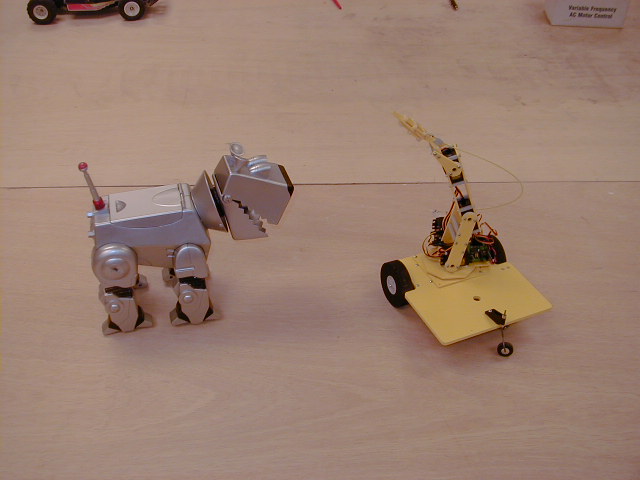

There will also be mobile robots on wheels (Figures 9 and 10). In conclusion, not every play can realistically be performed in the next few years. We need to find a writer to write a play for the "actors" shown here and the others we have. On the other hand, it may be interesting to play ``Romeo and Juliet'' with spiders and dogs, or with human-like robot actors in wheelchairs or on tricycles.

Figure 9. A battle of wheeled mobile robots.

Figure 10. Dog does not like the mobile arm.

Our robots will have a certain degree of autonomy and a certain degree of tele-operation. The autonomy will include non-deterministic rule-based systems and emergent behaviors based on distributed agents built from finite state machines. Hardware-realized random number generators will be used in them. Their autonomous behavior will therefore not be predictable, although it will be constrained to a certain degree: you do not know which path the robot will take to avoid an obstacle, but you can predict that it will try to avoid it and will not fly over it. In this way, for instance, additional conflicts or funny situations may emerge in plays.
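
A minimal sketch of one such non-deterministic finite-state-machine agent follows. It assumes that Python's seeded `random.Random` stands in for the hardware random number generator. The states, sensed inputs and action names (`walking`, `obstacle`, `turn_left`, ...) are hypothetical. The transition relation allows several successors, and the random source picks one. As a result, the way around an obstacle varies from run to run, but "fly" is never among the options.

```python
import random

# Hypothetical non-deterministic FSM: (state, sensed input) -> allowed (next_state, action).
# Several successors per entry make the machine non-deterministic; no entry ever
# contains "fly", so the behavior is unpredictable yet constrained.
TRANSITIONS = {
    ("walking", "clear"): [("walking", "walk_forward")],
    ("walking", "obstacle"): [("avoiding", "turn_left"), ("avoiding", "turn_right"),
                              ("avoiding", "walk_backward")],
    ("avoiding", "clear"): [("walking", "walk_forward")],
    ("avoiding", "obstacle"): [("avoiding", "turn_left"), ("avoiding", "turn_right")],
}


class NondeterministicAgent:
    def __init__(self, rng: random.Random, state: str = "walking") -> None:
        # rng stands in for the hardware random number generator (an assumption).
        self.rng = rng
        self.state = state

    def step(self, sensed: str) -> str:
        """Pick one allowed transition at random, move to it, and return its action."""
        options = TRANSITIONS[(self.state, sensed)]
        self.state, action = self.rng.choice(options)
        return action


if __name__ == "__main__":
    agent = NondeterministicAgent(random.Random(7))
    for sensed in ["clear", "obstacle", "obstacle", "clear"]:
        print(sensed, "->", agent.step(sensed))
```

In a real robot, several such machines would run side by side as distributed agents. In the FPGA realization, the random source would be a hardware generator rather than a software one.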

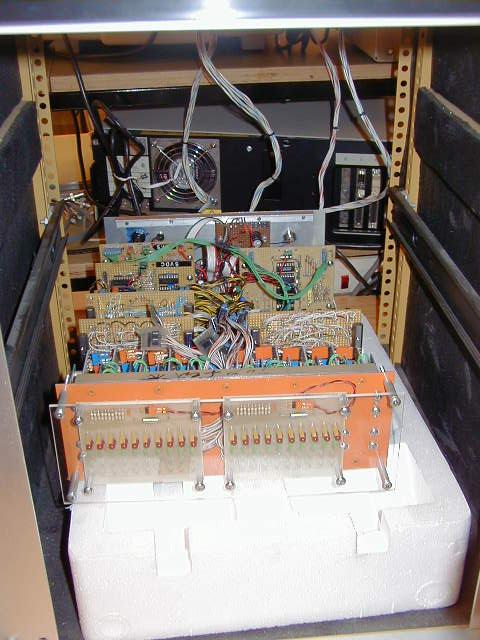

Tele-operation will go by radio through the control/transmission computer linked to the Internet. The "brains" of more complex robots, such as MUVAL (MUltiple-VAlued Logic robot, reasoning in multiple-valued logic), will be constructed as "societies of agents" (Figures 11, 12 and 13). Each agent will be either autonomous or controlled by a human located somewhere on the Internet. A person from Singapore could control the right hand and a person from Hawaii the walking gait. The voice could come from memory or, say, from Hungary. Thanks to Internet technology, all the recognition, processing and generation software can be distributed worldwide.
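
The "society of agents" can be sketched as a mapping from body channels (right hand, legs, voice) to controllers. Each channel is driven by a remote human when one is connected, and otherwise falls back to an autonomous software agent. The same fallback would let an automated agent stand in for a missing tele-agent during a performance. The channel names, the `Controller` type and the command strings are hypothetical. Network transport and radio transmission are deliberately left out.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional

# A controller maps the current time step to a command string for one channel.
Controller = Callable[[int], str]


@dataclass
class RobotBody:
    # Autonomous fallback agent for every channel (hypothetical behaviors).
    autonomous: Dict[str, Controller]
    # Remote human operators currently attached to some of the channels.
    remote: Dict[str, Controller] = field(default_factory=dict)

    def attach(self, channel: str, operator: Controller) -> None:
        self.remote[channel] = operator

    def detach(self, channel: str) -> None:
        self.remote.pop(channel, None)

    def tick(self, t: int) -> Dict[str, str]:
        """Collect one command per channel, preferring a connected human operator."""
        commands = {}
        for channel, fallback in self.autonomous.items():
            operator: Optional[Controller] = self.remote.get(channel)
            commands[channel] = (operator or fallback)(t)
        return commands


if __name__ == "__main__":
    robot = RobotBody(autonomous={
        "right_hand": lambda t: "rest",
        "legs": lambda t: "walk_gait" if t % 2 == 0 else "pause",
        "voice": lambda t: "play_from_memory",
    })
    robot.attach("right_hand", lambda t: "wave")  # e.g. an operator in Singapore
    print(robot.tick(0))
    robot.detach("right_hand")                    # the operator disconnects
    print(robot.tick(1))
```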

Figure 11. A MUVAL robot (from the left). Will we find collaborators to improve his (i.e., MUVAL's) appearance and intelligence?

Figure 12. Closer look at the interface between MUVAL and PC.

Figure 13. Permanent competition for MUVAL's head: a head designed by Mateusz Perkowski at a cost of $40 (in 2000 dollars). We predict that complete computer-operated heads under $20 will come from industry in 2001.

Figure 14. Pneumatic technology: the artificial muscles are on the right and the PC interface with valves is on the left.

In addition to electric control, our robots will have pneumatic control based on inexpensive artificial pneumatic muscles, a technology developed in the last few years (Figure 14). We are also experimenting with inexpensive hydraulic technologies based on pistons and syringes, and we find them easy to use and very promising for robot theater applications.

The performance will be partially scripted, like a Shakespeare play, but the actors/agents may deviate from the text, something unexpected can happen, or some tele-agents may be missing, in which case they will be replaced by automated software agents. This theatre will be a permanent Turing test for all its human participants and observers.

Humans in Lab 70 and on the Internet will play the roles of observers (audience) and/or participants (actors, agents). If you play the role of the spider, you will see the scene as seen by the camera in the eyes of the spider walking on the stage surface. If you are a bird or an angel, you will see the scene from above, but your body will not be seen by the audience.

In future plays, you, the tele-operator, can be a human-robot, an animal-robot, an alien, a mushroom, a plant, a dragon, an angel, or a machine. Real large industrial robots will be incorporated next, to change the scenery and play the roles of giants.

In addition to dramas and comedies, dances and vocal performances by robots, we will organize educational sessions. For instance, Figure 15 presents a setup with a Professor's Head that explains robot test technology to students.

Figure 15. The entire view of the Rhino Robot in a setup for automatic test and fault location with self-repair. In the foreground you see the conveyor belt with the board for test/self-repair. On the right is the Professor's Head, which will explain the project to students in English.

VIII. SOFTWARE.

Our software will unify several models, especially models of learning, that are known in various areas. For instance, we will use all the software we developed for logic synthesis [Perkowski99,Perkowski99c,Alan] as a basis for learning. For example, learning based on functional decomposition will be used for:

- recognition of objects in image: human faces, other robots, obstacles, inanimate objects.

- recognition of objects in movement: learning to walk; information from leg sensors, compass, sonars and other sensors.

- recognition in which the objects are situations, i.e., learning how to behave (this is done using higher-order relational data descriptions, created automatically from lower-level processes).

Thus our learning software will use all stages of the process: problem/constraint/environment description in the language L, its conversion to non-deterministic finite state machines, and their decomposition, minimization and encoding, as well as functional decomposition and minimization of multi-valued functions and relations. These stages will serve to describe, optimize and implement the robot behaviors in FPGA hardware. Here we follow the analogy of creating behaviors as compositions of simple and complex agents, i.e., state machines, which can be built using the most advanced synthesis methods rather than the Genetic Algorithm methods described in [DeGaris00], [DeGaris00a], [Miller99]. Although we use extensions of many methods from classical logic synthesis, our approach calls for a new logic synthesis theory and new algorithms. The reason is that learning data have properties typical of machine learning and data mining but absent in classical synthesis: unknown values, noise, relational rather than functional descriptions, non-determinism, a very high percentage of don't cares, the need to discretize input data, and the uncertain nature of results.

We will generalize multiple-valued logic synthesis towards general probabilistic, non-deterministic, continuous and fuzzy functions and relations, as well as to reversible and quantum logic [Kerntopf00]. The theory should apply to very large functions, relations and machines, and take into account noise, unknown values, and generalized don't cares such as those in relations [Perkowski97,Files98,Files98a]. Therefore, it will be based on implicit problem representation [Mishchenko00]. The new decomposition theory will be very general. It will include not only our previous generalizations of Ashenhurst/Curtis decomposition but also new decompositions based on Reconstructability Analysis [Zwick00]. Like previous logic design and machine learning theories, it should use Occam's Razor as its foundation.
We will extend to this new logic the recent information-theoretic approaches to multi-level logic synthesis and state machine synthesis, such as those of [Popel00] and [Jozwiak00].
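
To make the decomposition step concrete, the sketch below computes the column multiplicity of an Ashenhurst/Curtis decomposition chart. It assumes a completely specified Boolean function and a given split of the inputs into a bound set and a free set. The test itself is standard. If the multiplicity μ is at most 2, a simple disjoint decomposition f = F(g(bound), free) exists. In general, Curtis decomposition needs ceil(log2 μ) intermediate functions. This is only the textbook test for binary, fully specified functions. It does not handle the don't cares, relations or multiple-valued variables discussed above; with don't cares, column merging becomes a graph-coloring problem.

```python
from itertools import product
from math import ceil, log2
from typing import Callable, Sequence, Tuple

BoolFn = Callable[[Tuple[int, ...]], int]


def column_multiplicity(f: BoolFn, n: int, bound: Sequence[int]) -> int:
    """Number of distinct columns in the decomposition chart of f.

    Columns are indexed by assignments to the bound-set variables; each column
    lists the values of f over all assignments to the free-set variables.
    """
    free = [i for i in range(n) if i not in bound]
    columns = set()
    for b_vals in product((0, 1), repeat=len(bound)):
        column = []
        for f_vals in product((0, 1), repeat=len(free)):
            x = [0] * n
            for i, v in zip(bound, b_vals):
                x[i] = v
            for i, v in zip(free, f_vals):
                x[i] = v
            column.append(f(tuple(x)))
        columns.add(tuple(column))
    return len(columns)


def intermediate_functions_needed(mu: int) -> int:
    """Curtis: mu distinct columns must be encoded by ceil(log2 mu) functions g_i."""
    return ceil(log2(mu)) if mu > 1 else 0
```

For example, (x0 XOR x1) AND x2 with bound set {x0, x1} has multiplicity 2, so the single function g = x0 XOR x1 yields a simple disjoint decomposition. The three-input majority function with the same bound set has multiplicity 3 and therefore needs two intermediate functions.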

This new theory will be oriented towards Reconfigurable Hardware and, specifically, Learning Hardware. It should be geared either towards non-hardware realizations, such as realizing decomposed netlists by Prolog-like rules and fuzzy rules, or towards the newest hardware technologies based on multiplexed FPGAs such as the Xilinx Virtex. (Recall that we treat Learning Hardware as a generalization of Evolvable Hardware in which any learning method, rather than only the Darwinian genetic algorithm, is realized in reconfigurable hardware.)

Thus, we postulate the creation of a new logic synthesis theory.

The partial automata we develop will be of two types. Some will correspond to characteristic behaviors that are highly automated in animals, such as walking or eating.

The others will be various learning engines realized in hardware. So far, we have realized the Cube Calculus Machine [Sendai92], the Functional Decomposition Machine and the Rough Set Machine [Euro-Micro99]. We know, of course, that there is no Cube Calculus Machine in our brain. We realize it for our robot's brain because it is an efficient method to solve the combinatorial problems that occur in robot vision and learning (such as graph coloring or matching). Whether the actual brain works like this is irrelevant; the actual brain does not work according to the GA or NN metaphors either. So, as I wrote, no model can claim to be any "more true" than another. Let the best metaphor win the stage.
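
The sketch below illustrates the kind of operation a cube calculus machine accelerates. It represents a multiple-valued cube in positional notation: one bitmask per variable, where bit k set means the variable may take value k. On top of that representation it implements three basic cube operations: intersection (bitwise AND per variable, empty if any variable's mask becomes 0), the supercube (bitwise OR), and containment. This is a software sketch under that representation assumption. It is not a model of the hardware architecture in [Sendai92].

```python
from typing import Optional, Tuple

# A cube over multiple-valued variables in positional notation:
# one bitmask per variable; bit k set means "value k is allowed".
Cube = Tuple[int, ...]


def intersect(a: Cube, b: Cube) -> Optional[Cube]:
    """Cube intersection: AND each variable's literal; None if the result is empty."""
    result = tuple(x & y for x, y in zip(a, b))
    return None if any(lit == 0 for lit in result) else result


def supercube(a: Cube, b: Cube) -> Cube:
    """Smallest cube containing both: OR each variable's literal."""
    return tuple(x | y for x, y in zip(a, b))


def contains(a: Cube, b: Cube) -> bool:
    """True if cube a covers cube b (each literal of b is a subset of a's literal)."""
    return all(y & ~x == 0 for x, y in zip(a, b))
```

Take two three-valued variables. The cube (0b011, 0b111) means "X1 in {0, 1}, X2 arbitrary", and intersecting it with (0b110, 0b001) gives (0b010, 0b001), that is, X1 = 1 and X2 = 0. Because every operation is a word-wide bitwise step per variable, this style of computation maps naturally onto parallel hardware.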

Various voting and agent-like behaviors will be used to combine the machines, but we admit that our approach is weak here, as are the others. We count on our technology's hardware speed, and also on our implementation of new ideas taken from game theory. The "brain" will be constructed both hierarchically and heterarchically, based on many levels of voting and competing behaviors. The lowest levels will be highly automated for speed and efficiency. The lowest level, Movement Control, will cover the spider's ability to walk forward and backward, turn left or right, sit on its back, bend its knees, "play dead", walk, dance, avoid small obstacles, climb stairs, hobble along, etc. All these behaviors will be prespecified and preprogrammed, but their combinations and variants will be emergent. Part of the lowest-level control will be in the microcontroller on the robot's body, part in the FPGA boards of the radio-connected PC, and part in its software. In our solipsistic approach, all sensors, switches and effectors will be mirrored by software data structures, which will create and receive symbolic information for the robot's brain. Thus going from real to simulated worlds and vice versa will be easy, and the robot's internal models of its environment can be compared with real data during interaction with that environment.
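
One simple reading of "voting and competing behaviors" is a weighted vote among the behaviors at one level of the hierarchy. Each behavior proposes actions with strengths, the strengths for each action are summed, and the winner is passed down to the preprogrammed Movement Control level. The behaviors, sensor names, weights and action names below are hypothetical. A weighted plurality vote is only one of the many arbitration schemes the text leaves open.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Sequence, Tuple

# A behavior reads symbolic sensor data and returns (action, strength) votes.
Vote = Tuple[str, float]
Behavior = Callable[[Dict[str, float]], List[Vote]]


def avoid_obstacle(sensors: Dict[str, float]) -> List[Vote]:
    # Hypothetical: strong vote to turn when the sonar range is short.
    if sensors["sonar_cm"] < 20:
        return [("turn_left", 0.9), ("walk_backward", 0.4)]
    return []


def seek_battery(sensors: Dict[str, float]) -> List[Vote]:
    # Hypothetical: the lower the energy ("hunger"), the stronger the urge forward.
    return [("walk_forward", 1.0 - sensors["energy"])]


def wander(sensors: Dict[str, float]) -> List[Vote]:
    # Hypothetical weak default preferences, independent of the sensors.
    return [("walk_forward", 0.2), ("turn_right", 0.1)]


def arbitrate(sensors: Dict[str, float], behaviors: Sequence[Behavior]) -> str:
    """Sum the strengths per action and return the action with the largest total."""
    totals: Dict[str, float] = defaultdict(float)
    for behavior in behaviors:
        for action, strength in behavior(sensors):
            totals[action] += strength
    return max(totals, key=totals.get) if totals else "rest"
```

Several such arbiters could be stacked, each one's output becoming a vote at the next level. That stacking is one possible way to obtain the "many levels of voting" described above.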

The higher-level behavior layer will include the basic behaviors and scenarios in the world of robots, which can, however, be highly unstructured. They will include: avoidance of large obstacles requiring planning, path and movement planning (also in the presence of unfriendly moving obstacles), duels and fights, copulation and love scenes, food (battery) collection and eating, child raising, sleeping and rest, and entertainment. The first variant of a program that combines ready-made search scenarios with a Genetic Algorithm, used to select the best program in the space of programs, is described in [Dill00].
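
For the "path and movement planning" behavior, a minimal sketch is breadth-first search on an occupancy grid of the stage, which returns a shortest 4-connected path around obstacles. The grid encoding ('#' for an obstacle) is an assumption. BFS is a stand-in chosen for brevity; it is not the GA-based approach of [Dill00]. Unfriendly moving obstacles would require replanning whenever the grid changes.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def plan_path(grid: List[str], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path on a grid where '#' marks an obstacle, or None."""
    rows, cols = len(grid), len(grid[0])
    parent: Dict[Cell, Optional[Cell]] = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start, then reverse.
            path: List[Cell] = []
            node: Optional[Cell] = cell
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] != "#" and (nr, nc) not in parent):
                parent[(nr, nc)] = cell
                queue.append((nr, nc))
    return None  # the goal is unreachable
```

Because BFS expands cells in order of distance, the first time it reaches the goal it has found a shortest path. A spider that re-plans at every step would therefore react to a moving opponent as soon as the grid is updated.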

There will be a separate system for image processing and vision. It will use standard image processing software that we developed previously, based on line detection and shape recognition using Hough and other transforms. Typical applications include ball recognition for "soccer-like" games, sword recognition for duels, recognition of other robots for all social behaviors, and human face recognition for demos ("where is my teacher standing?").
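
The line-detection step can be illustrated with a minimal Hough transform. Each edge point (x, y) votes for every discretized line rho = x cos(theta) + y sin(theta) that passes through it, and peaks in the (theta, rho) accumulator correspond to lines in the image. The 1-degree angle step, integer rho bins and plain-Python accumulator are assumptions made for readability. A real system would run this on an edge map, typically with an optimized library.

```python
from collections import Counter
from math import cos, radians, sin
from typing import Iterable, List, Tuple


def hough_accumulator(points: Iterable[Tuple[int, int]],
                      theta_step_deg: int = 1) -> Counter:
    """Vote in (theta_deg, rho) space for every line through each edge point.

    Lines are parameterized as rho = x*cos(theta) + y*sin(theta), with theta in
    [0, 180) degrees and rho rounded to the nearest integer (an assumed bin size).
    """
    acc: Counter = Counter()
    for x, y in points:
        for theta in range(0, 180, theta_step_deg):
            t = radians(theta)
            acc[(theta, round(x * cos(t) + y * sin(t)))] += 1
    return acc


def strongest_lines(acc: Counter, top: int = 3) -> List[Tuple[int, int, int]]:
    """Return the `top` accumulator cells as (theta_deg, rho, votes)."""
    return [(theta, rho, votes) for (theta, rho), votes in acc.most_common(top)]
```

For the ten points of the horizontal segment y = 5, x = 0..9, the cell (90, 5) collects all ten votes. With integer rho bins, neighboring angles can tie for short segments, so a practical detector would also apply non-maximum suppression before reporting lines.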

Complete speech recognition, natural language and speech generation software from the Oregon Graduate Institute will be used without modification in the first phase of research. In this phase it will allow humans to control robots by voice commands and to learn about the spiders' "emotions", "states of character" and "chromosomes". In the next stage this technology will also be used for robot-robot communication. Again, typical language and speech-generation scenarios will include: singing; speech generation representing emotions; robot, animal, alien and human voices and expression styles; and the voice acting techniques of human theater.

Our robots will be highly emotional. This means that the emotion modeling system will be central in their brains and will globally affect the operation of all subsystems. Rational and irrational behaviors will compete in the free market of the society of mind, implemented as a blackboard architecture. The state of the character of each agent will be described by a vector:

[energy level, maturity level, hunger satisfaction, sexual instinct satisfaction, social acceptance satisfaction, power satisfaction, moral self-satisfaction, intellectual satisfaction]

Highly complex equations, partially human-created and partially evolved, will use cellular automata, dynamic fuzzy logic [Buller00] and game theory models, leading to chaotic dynamics, sudden mood changes and other emergent phenomena. The state of the society is described by the Cartesian product of the states of its members. The highest-level controlling computer can play the role of the God of the Spiders' World: it analyses the dynamics of the global vector and broadcasts global parameters such as behavior-releasing thresholds. Mechanisms of this kind are known to regulate societies of ants and termites.
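
A minimal data-structure sketch of these definitions follows. It contains the eight-component character vector, the society state as the Cartesian product (here a tuple) of member states, and a set of global thresholds broadcast by the top-level computer. A behavior is released when a need drops below its threshold. The field names mirror the vector above. The [0, 1] scaling, the behavior names and the single-threshold release rule are assumptions. The cellular-automata, fuzzy and game-theoretic dynamics mentioned in the text are not modeled.

```python
from dataclasses import dataclass, fields
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Character:
    # All components scaled to [0, 1] (assumption); low values mean unmet needs.
    energy: float
    maturity: float
    hunger_satisfaction: float
    sexual_satisfaction: float
    social_acceptance: float
    power_satisfaction: float
    moral_self_satisfaction: float
    intellectual_satisfaction: float


# State of the society: one Character per member, i.e. a point in the product space.
Society = Tuple[Character, ...]

# Hypothetical mapping from an unmet need to the behavior it releases.
RELEASED_BY = {
    "hunger_satisfaction": "seek_battery",
    "social_acceptance": "join_coalition",
    "power_satisfaction": "challenge_to_duel",
}


def released_behaviors(member: Character, thresholds: Dict[str, float]) -> List[str]:
    """Behaviors released because a need fell below its broadcast threshold."""
    return [RELEASED_BY[name] for name, limit in thresholds.items()
            if name in RELEASED_BY and getattr(member, name) < limit]


def society_mean(society: Society) -> Dict[str, float]:
    """Average of each component: a summary the top-level computer might watch."""
    return {f.name: sum(getattr(m, f.name) for m in society) / len(society)
            for f in fields(Character)}
```

Changing a single broadcast threshold shifts behavior across the whole society at once. For example, lowering the hunger threshold makes every spider less eager to seek batteries, which is the kind of global control the text attributes to the highest computer.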

Social behaviors of the spider society will include the mechanisms that are fundamental to the animal kingdom: the fight for survival and the search for food, as well as sexual reproduction. Food will be simulated by batteries, which the robots will seek when hungry. They may choose to fight for the batteries or to cooperate in providing themselves with batteries. Similarly, monogamous or polygamous families may emerge. Sexual reproduction will be simulated by a crossover algorithm. Robots of opposite sexes that are close to each other and suitably positioned will exchange the electrical codes of their chromosomes, modeling the Genetic Algorithm. This will create a chromosome for a new robot mind, which will be radio-transmitted to one of the previously idle robots. This robot will know its parents and will then be subject to their education. The observers will be able at any time to perform software vivisection, that is, to inspect and visualize on their computer screens the emotion vectors and chromosomes of any robot. The aging process will be simulated by energy levels that decrease with time and with battle injuries as seen by the sensors. When the energy level drops below some threshold, the robot dies. This means that it is physically sent to the pool of idle robots, where it waits for its reincarnation after a subsequent mating of surviving robots. Only robots with certain values of energy level and other parameters are allowed to reproduce. The emergent behaviors will include duels and fights, structured or not, between the spiders. Some kinds of ritual behavior typically associated with war, marriage and family may emerge. The robots will be able to create coalitions to achieve goals; these coalitions will include food-seeking groups, families, countries and armies. This will require adapting the known theories of coalition and conflict, mostly based on game theory, to the programming of the spider society.
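
The reproduction and aging rules above can be sketched as follows. The sketch assumes that a chromosome is a fixed-length bit string and that mating uses single-point crossover. The energy thresholds, decay rate and `Spider` record are hypothetical parameters. Radio transmission to the idle robot is reduced to returning the child chromosome.

```python
import random
from dataclasses import dataclass
from typing import List, Optional

REPRODUCTION_ENERGY = 0.6  # hypothetical minimum energy required to mate
DEATH_ENERGY = 0.1         # hypothetical energy below which a robot "dies"


@dataclass
class Spider:
    name: str
    sex: str               # "male" or "female"
    energy: float
    chromosome: List[int]  # bit string encoding the robot's mind
    alive: bool = True


def age(spider: Spider, dt: float, injury: float = 0.0, rate: float = 0.01) -> None:
    """Energy decreases with time and sensed injuries; below threshold the robot dies."""
    spider.energy -= rate * dt + injury
    if spider.energy < DEATH_ENERGY:
        spider.alive = False  # physically returned to the pool of idle robots


def mate(a: Spider, b: Spider, rng: random.Random) -> Optional[List[int]]:
    """Single-point crossover of two equal-length parent chromosomes, if allowed."""
    eligible = (a.alive and b.alive and a.sex != b.sex
                and min(a.energy, b.energy) >= REPRODUCTION_ENERGY)
    if not eligible:
        return None
    point = rng.randrange(1, len(a.chromosome))  # cut strictly inside the string
    return a.chromosome[:point] + b.chromosome[point:]
```

Keeping the eligibility test inside `mate` means the energy rule and the sex rule cannot be bypassed by a caller. The crossover point is drawn so that the child always inherits at least one gene from each parent.
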
Both zero-sum and non-zero-sum games will be programmed, and the interesting phenomena that happen at their borders and in their interplay will be simulated and analyzed. The weights in the game matrices will be continuously updated to reflect the changing emotions of the spiders. The role of communication between partners in non-zero-sum games will be investigated [Wright99]. We expect that many phenomena such as coalition forming, cooperation and competition will be observable. We also expect to be pleasantly surprised by things that may happen and that we cannot now predict.
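
The sketch below makes the zero-sum versus non-zero-sum distinction concrete. It stores a two-player game as a pair of payoff matrices, tests whether the game is zero-sum, and finds its pure-strategy Nash equilibria by checking mutual best responses. The Prisoner's Dilemma payoffs and the `hostility` parameter, which shifts the payoff of defecting, are illustrative assumptions. They are not the game matrices of the spider society.

```python
from itertools import product
from typing import List, Sequence, Tuple

Matrix = Sequence[Sequence[float]]


def is_zero_sum(a: Matrix, b: Matrix) -> bool:
    """True if the two players' payoffs cancel in every cell."""
    return all(a[i][j] + b[i][j] == 0
               for i in range(len(a)) for j in range(len(a[0])))


def pure_nash(a: Matrix, b: Matrix) -> List[Tuple[int, int]]:
    """Cells (row, col) where neither player gains by deviating alone."""
    rows, cols = len(a), len(a[0])
    return [(i, j) for i, j in product(range(rows), range(cols))
            if a[i][j] == max(a[k][j] for k in range(rows))
            and b[i][j] == max(b[i][k] for k in range(cols))]


def prisoners_dilemma(hostility: float = 0.0) -> Tuple[Matrix, Matrix]:
    """Strategies: 0 = cooperate, 1 = defect; hostility is added to defecting payoffs."""
    a = [[3, 0], [5 + hostility, 1 + hostility]]  # row player
    b = [[3, 5 + hostility], [0, 1 + hostility]]  # column player
    return a, b
```

With `hostility = 0`, the only pure equilibrium is mutual defection, (1, 1). Lowering `hostility` to -2.5 makes mutual cooperation, (0, 0), the only pure equilibrium. This is one way that updating matrix weights with emotions could reshape the game.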

Recent research on axiomatic morality uses models from game theory, automatic theorem proving, knowledge-based reasoning, higher-order logic, and constraint programming [Danielson92]. We will program all the known models in Prolog, Fuzzy Prolog and new constraint-programming and inductive-programming languages, as the highest level of control of the spiders' society. Next, we will experiment with emergent behaviors and emergent software creation by robots. The moral codes will first include Asimov's Three Laws of Robotics, but soon we will enhance them with simplified Ten Commandments or other highly abstract laws, i.e., higher-order logic rule sets adapted to the spiders' conditions. The laws will be taken from books on ethics, temporal logic, multivalued logic, verification theory and various continuous and modal logics [Hajnicz]. No attempt will be made to make the global logic system of any of the robotic agents or societies consistent. Let emergence decide whether logical spiders have a higher chance of survival.
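
As a toy illustration of a prioritized moral code, the sketch below ranks candidate actions lexicographically by which laws they violate. Under this ranking, breaking a higher law (Asimov's First) always outweighs breaking any combination of lower ones. The action descriptions and predicate names (`harms_human`, `disobeys_order`, `endangers_self`) are hypothetical. This simple ordering stands in for, rather than implements, the Prolog and higher-order-logic models the text plans to use.

```python
from typing import Callable, Dict, List, Tuple

Action = Dict[str, bool]

# Laws in priority order; each returns True if the action violates it.
# Simplified, hypothetical encodings of Asimov's Three Laws for the spider world.
LAWS: List[Callable[[Action], bool]] = [
    lambda act: act.get("harms_human", False),     # First Law
    lambda act: act.get("disobeys_order", False),  # Second Law
    lambda act: act.get("endangers_self", False),  # Third Law
]


def violation_profile(action: Action) -> Tuple[int, ...]:
    """Tuple of 0/1 violations, highest-priority law first."""
    return tuple(int(law(action)) for law in LAWS)


def choose(candidates: Dict[str, Action]) -> str:
    """Pick the action whose violation profile is lexicographically smallest."""
    return min(candidates, key=lambda name: violation_profile(candidates[name]))
```

Appending simplified Commandments to `LAWS` would extend the code without changing `choose`. Inconsistent or conflicting rules would simply produce ties or surprising choices, in line with the decision above not to enforce global consistency.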

Although we put so much emphasis on emotions and emergence, the role of the Internet and of the controlling humans cannot be neglected, especially in the first phase. The data about robot movements, behaviors and interactions that come from human-controlled keyboards, joysticks and microphones will be collected and stored for reuse. The system will automatically create an ever-growing repertoire of future theater plays, robot interactions, games and life in the form of stored assemblies of control signals and associated sounds. In addition, users will also send ready-made control scenarios of plays through the WWW. One can conclude that in the first phases the WWW technology used in the theatre will be quite similar to that used in WWW chat rooms. We will observe people's preferences regarding robot behaviors, what the observers want to play in our theater, and what they feel about it. So far, I have found that people want to construct and see "robot sex and violence" as well as competitive behaviors such as battles and sport competitions, rather than intellectual robot behaviors. Instead of being scandalized, let us remember that "Romeo and Juliet" or "King Lear" can also be characterized as "sex and violence". Thus, as in true art, let us try to use the vehicle of theater to raise the angelic parts of the spiders' souls above their animal natures, in order to appreciate the mystery of life.

IX. CONCLUSIONS

This paper is the first in a series about the Oregon Cyber Theatre. The ideas of the theater and the robot designs will be presented in more detail in forthcoming papers. In particular, future papers will cover robot construction, image processing software, machine learning, agents, modeling of social behaviors and emergent morality, and WWW interfacing. The reader interested in more technical details should consult the literature given below.

I have proposed here a long-term research project and a worldwide invitation to collaborate. We plan to find researchers and enthusiasts with all kinds of skills, talents and interests: people with writing/directing, robot-building, psychology, biology and many other backgrounds. For instance, we are looking for somebody who understands well the behaviors and movements of spiders, or the social behaviors of insects.

X. ACKNOWLEDGMENTS

I would like to thank Martin Zwick and Alan Mishchenko for stimulating discussions, and Alan Mishchenko, Craig Files, Stanislaw Grygiel, Karen Dill, Michael Levy, Anas Al-Rabadi, Rahul Malvi, Kevin Stanton, Tu Dinh and others for writing software. Finally, the hard work of the Robo-Club and Electric Horse groups, and especially of Bryce Tucker and Jeff Ratcliffe, should be mentioned. Michael Levy also helped improve this text.

I would like to acknowledge grants from Intel Corporation, the Portland State University Foundation, the Dean's Office, and Provost funds, as well as equipment donations from Tektronix Inc., Seiko Robots, Xilinx, Altera, and private donors.

Creation of this laboratory would not have been possible without the help and encouragement of Doug Hall, the Interim Chair of ECE. Children's hospitals and high schools in Oregon may request our visit and a robot demonstration.

LITERATURE

- [Abu-Mostafa88] Y. Abu-Mostafa (ed.), ``Complexity in Information Theory,'' Springer Verlag, New York, 1988, p. 184.

- [Asimov50] I. Asimov, ``I, Robot,'' Fawcett, New York, 1950.

- [Ashenhurst57] R.L. Ashenhurst, ``The Decomposition of Switching Functions'', Proc. Int. Symp. of Th. of Switching, 1957.

- [Bratko86] I. Bratko, ``Prolog Programming for Artificial Intelligence,'' Addison-Wesley, Reading, Mass, 1986.

- [Brecht67] Bertolt Brecht, ``Radiotheorie (Radio Theory),'' in: Gesammelte Schriften, Vol. 18, Frankfurt/M., 1967, pp. 119-134.

- [Buller98] A. Buller, ``Artificial Brain. Phantasies no more,'' Proszynski i Ska, Warsaw, 1998, (in Polish).

- [Buller00] A. Buller, ``Dynamic Fuzzy Sets,'' this proceedings.

- [Bryant86] R.E. Bryant, ``Graph-based algorithms for Boolean function manipulation,'' IEEE Transactions on Computers, C-35, No. 8, pp. 677-691, 1986.

- [Codd] E.F. Codd, ``A Relational Model of Data for Large Shared Data Banks,'' Comm. ACM, 13, pp. 377-387.

- [Curtis62] H.A. Curtis, ``A New Approach to the Design of Switching Circuits,'' Princeton, N.J., Van Nostrand, 1962.

- [Danielson92] P. Danielson, ``Artificial Morality, Virtuous Robots for Virtual Games,'' Routledge, U.K., 1992.

- [Dawkins76] R. Dawkins, ``The Selfish Gene,'' Oxford University Press, New York, 1976.

- [Dennett88] D. Dennett, ``When philosophers encounter artificial intelligence,'' Daedalus, 117: pp. 283-295, 1988.

- [Dill97] K. Dill, and M. Perkowski, ``Minimization of Generalized Reed-Muller Forms with Genetic Operators,'' Proc. Genetic Programming '97 Conf., July 1997, Stanford Univ., CA.

- [Dill97a] K. Dill, J. Herzog, and M. Perkowski, ``Genetic Programming and its Application to the Synthesis of Digital Logic,'' Proc. PACRIM '97, Canada, August 20-22, 1997.

- [Dill00] K.M. Dill and M. Perkowski, ``Creation of a Cybernetic (Multi-Strategic Learning) Problem-Solver: Automatically Designed Algorithms for Logic Synthesis and Minimization,'' this proceedings.

- [Drexler86] K.E. Drexler, ``Engines of Creation,'' Anchor Press, New York, 1986.

- [Furguson81] R. Furguson, ``Prolog: A step towards the ultimate computer language,'' Byte, 6, pp. 384-399, 1981.

- [Files98] C. Files, M. Perkowski, ``An Error Reducing Approach to Machine Learning Using Multi-Valued Functional Decomposition,'' Proc. ISMVL'98, pp. 167 - 172, May 1998.

- [Files98a] C. Files, M. Perkowski, ``Multi-Valued Functional Decomposition as a Machine Learning Method,'' Proc. ISMVL'98, pp. 173 - 178, May 1998.

- [Files00] C. Files, and M. Perkowski, ``Decomposition based on MVDDs,'' accepted to IEEE Transactions on Computer Aided Design.

- [Files00a] C. Files, ``Machine Learning Using New Decomposition of Multi-Valued Relations,'' this proceedings.

- [DeGaris93] H. DeGaris, ``Evolvable Hardware: Genetic Programming of a Darwin Machine,'' In ``Artificial Nets and Genetic Algorithms,'' R.F. Albrecht, C.R. Reeves and N.C. Steele (eds), Springer Verlag, pp. 441-449, 1993.

- [DeGaris97] H. DeGaris, ``Evolvable Hardware: Principles and Practice,'' CACM Journal, August 1997.

- [DeGaris00] http://www.hip.atr.co.jp/~degaris

- [DeGaris00a] H. DeGaris, his recent book.

- [Hamburger79] H. Hamburger, ``Games as Models of Social Phenomena,'' W.H. Freeman and Company, 1979.

- [Hero] Hero of Alexandria, ``On Pneumatics, Hydraulics and Mechanical Theater''.

- [Higuchi96] T. Higuchi, M. Iwata, and W. Liu (eds), ``Evolvable Systems: From Biology to Hardware,'' Lecture Notes in Computer Science, No. 1259, Proc. First Intern. Conf. ICES'96, Tsukuba, Japan, October 1996, Springer Verlag, 1997.

- [Hillis88] W. D. Hillis, ``Intelligence as an emergent behavior,'' Daedalus, 117, pp. 175-189, 1988.

- [Jozwiak98] L. Jozwiak, M.A. Perkowski, D. Foote, ``Massively Parallel Structures of Specialized Reconfigurable Cellular Processors for Fast Symbolic Computations,'' Proc. MPCS'98 - The Third International Conference on Massively Parallel Computing Systems, Colorado Springs, Colorado - USA, April 6-9, 1998.

- [Jozwiak00] L. Jozwiak, and A. Slusarczyk, ``Application of Information Relationships and Measures to Decomposition and Encoding of Incompletely Specified Sequential Machines,'' this proceedings.

- [Kerntopf00] P. Kerntopf, ``Logic Synthesis using Reversible Gates,'' this proceedings.

- [Kurzweil] R. Kurzweil, ``The Age of Spiritual Machines,'' 1999.

- [Langton89] Ch.G. Langton (ed.), ``Artificial Life: The Proceedings of an Interdisciplinary Workshop on the Synthesis and Simulation of Living Systems,'' September 1987, Los Alamos, Addison-Wesley, 1989.

- [Euro-Micro99] T. Lewis, M. Perkowski, and L. Jozwiak, ``Learning in Hardware: Architecture and Implementation of an FPGA-Based Rough Set Machine,'' Proceedings of the Euro-Micro'99 Conference, Milano, Italy, September 1999.

- [Luce57] R.D. Luce and H. Raiffa, ``Games and Decisions,'' John Wiley and Sons, New York, 1957.

- [Maynard-Smith84] J. Maynard Smith, and G.R. Price, ``The Logic of Animal Conflict,'' Nature, 246, pp. 15-18, 1973.

- [Michalski77] R.S. Michalski and J.B. Larson, ``Inductive Inference of VL Decision Rules,'' in Workshop on Pattern-Directed Inference Systems, Hawaii, May 1977.

- [Michalski98] R.S. Michalski, I. Bratko, and M. Kubat, ``Machine Learning and Data Mining: Methods and Applications,'' Wiley and Sons, 1998.

- [Michie88] D. Michie, ``Machine Learning in the next five years,'' Proc. EWSL'88, 3rd European Working Session on Learning, Glasgow, Pitman, London, 1988.

- [Minsky86] M. Minsky, ``The Society of Mind,'' Simon and Schuster, New York, 1986.

- [Moravec] H. Moravec, his recent book, 1999.

- [Mishchenko00] A. Mishchenko, ``A Breakthrough in Problem Representation: Implicit Methods for Logic Synthesis, Test and Verification,'' these proceedings.

- [Pawlak91] Z. Pawlak, ``Rough Sets. Theoretical Aspects of Reasoning about Data,'' Kluwer Academic Publishers, 1991.

- [Perkowski85] M. Perkowski, ``Systolic Architecture for the Logic Design Machine,'' Proc. of the IEEE and ACM International Conference on Computer Aided Design - ICCAD'85, pp. 133 - 135, Santa Clara, 19 - 21 November 1985.

- [Perkowski92] M.A. Perkowski, ``A Universal Logic Machine,'' invited address, Proc. of the 22nd IEEE International Symposium on Multiple Valued Logic, ISMVL'92, pp. 262 - 271, Sendai, Japan, May 27-29, 1992.

- [Perkowski97] M. Perkowski, M. Marek-Sadowska, L. Jozwiak, T. Luba, S. Grygiel, M. Nowicka, R. Malvi, Z. Wang, and J. S. Zhang, ``Decomposition of Multiple-Valued Relations,'' Proc. ISMVL'97, Halifax, Nova Scotia, Canada, May 1997, pp. 13 - 18.

- [Perkowski97a] M. A. Perkowski, L. Jozwiak, and D. Foote, ``Architecture of a Programmable FPGA Coprocessor for Constructive Induction Approach to Machine Learning and other Discrete Optimization Problems,'' in Reiner W. Hartenstein and Victor K. Prasanna (eds), ``Reconfigurable Architectures. High Performance by Configware,'' IT Press Verlag, Bruchsal, Germany, 1997, pp. 33 - 40.

- [Perkowski99] M. Perkowski, ``Do It Yourself Reconfigurable Supercomputer that Learns,'' book preprint, Portland, Oregon, 1999.

- [Perkowski99a] M. Perkowski, S. Grygiel, Q. Chen, and D. Mattson, ``Constructive Induction Machines for Data Mining,'' Proc. Conference on Intelligent Electronics, Sendai, Japan, 14-19 March, 1999.

- [Perkowski99b] M. Perkowski, R. Malvi, S. Grygiel, M. Burns, and A. Mishchenko, ``Graph Coloring Algorithms for Fast Evaluation of Curtis Decompositions,'' Proc. DAC'99, New Orleans, LA, USA, June 21-25, 1999.

- [Perkowski99c] M.A. Perkowski, A.N. Chebotarev, and A.A. Mishchenko, ``Evolvable Hardware or Learning Hardware? Induction of State Machines from Temporal Logic Constraints,'' The First NASA/DOD Workshop on Evolvable Hardware (NASA/DOD-EH 99). Jet Propulsion Laboratory, Pasadena, California, USA, July 19-21, 1999.

- [POLO00] PSU POLO Directory with DM/ML Benchmarks, software and papers: http://www.ee.pdx.edu/polo/

- [Popel00] D. Popel, S. Yanushkevich, M. Perkowski, P. Dziurzanski, V. Shmerko, ``Information Theoretic Approach to Minimization of Arithmetic Expressions,'' these proceedings.

- [MUSEUM] Robot Museum Theatre, web page.

- [Rowe88] N.C. Rowe, ``Artificial Intelligence Through Prolog,'' Prentice Hall, Englewood Cliffs, N.J. 1988.

- [Stanton90] K.B. Stanton, P.R. Sherman, M.L. Rohwedder, Ch.P. Fleskes, D. Gray, D.T. Minh, C. Espinosa, D. Mayi, M. Ishaque, M.A. Perkowski, ``PSUBOT - A Voice-Controlled Wheelchair for the Handicapped,'' Proc. of the 33rd Midwest Symp. on Circuits and Systems, pp. 669 - 672, Alberta, Canada, August 1990.

- [Steinbach99] B. Steinbach, M. Perkowski, and Ch. Lang, ``Bi-Decomposition in Multi-Valued Logic for Data Mining,'' Proc. ISMVL'99, May, 1999.

- [Turing53] A. Turing, ``Computing Machinery and Intelligence,'' Mind, LIX (236), pp. 433-460, 1950.

- [Ullanta00] Ullanta Performance Robotics, web page.

- [Vuillemin96] J. Vuillemin, P. Bertin, D. Roncin, M. Shand, H. Touati, and Ph. Boucard, ``Programmable Active Memories: Reconfigurable Systems Come of Age,'' IEEE Trans. on VLSI Systems, Vol. 4, No. 1, pp. 56-69, March 1996.

- [Warrick80] P. Warrick, ``The Cybernetic Imagination in Science Fiction,'' Cambridge, MA: MIT Press, 1980.

- [Wright99] R. Wright, ``Non-Zero: The Logic of Human Destiny,'' Pantheon Books, 1999.

- [Zwick00] M. Zwick, ``Reconstructability Analysis Approach to Data Mining,'' these proceedings.